Quick Verdict (TL;DR)

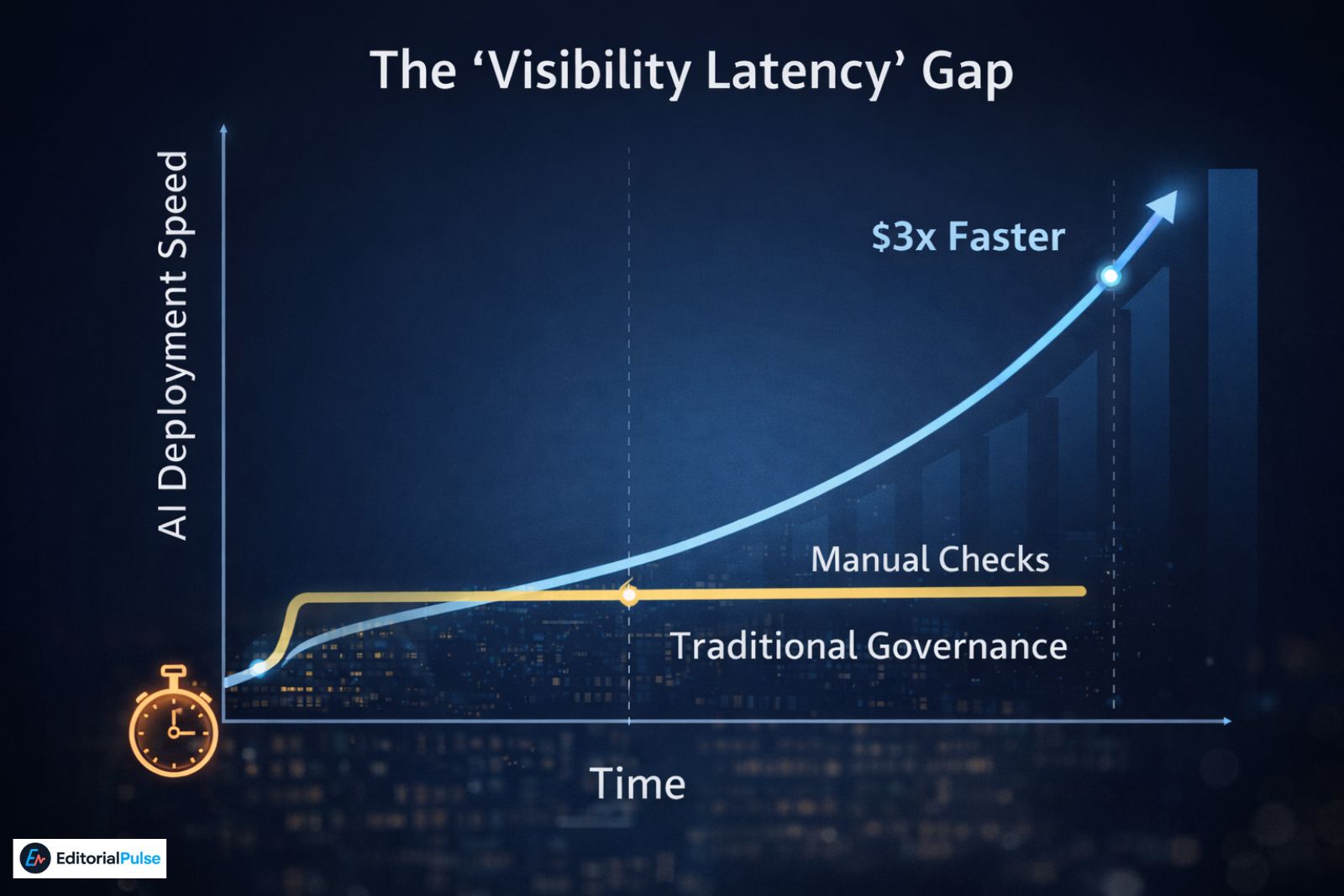

In 2026, AI governance is no longer a compliance checkbox; it is a visibility system. Strategic visibility is the ability of leadership to see AI systems in terms of business impact, ownership, and intervention paths before risks become damages. Organizations that implement visibility-first governance reduce “governance latency” and deploy AI 3× faster than those relying on traditional ethics checklists.

Why Leaders Still Don’t Trust Their Own AI

Six months into deploying an AI-driven credit model at a mid-sized financial institution, a CFO asked in a board meeting:

“If this decision goes wrong, who is accountable — and how would we explain it?”

No one answered immediately. That silence is familiar.

The data science team cited accuracy metrics. Legal referenced compliance rules. IT spoke about monitoring tools. But no one could explain, in plain business terms, why the AI made its decisions or how those decisions aligned with company strategy.

This is not a technology failure. It’s a visibility failure.

In 2026, the real challenge for organizations isn’t adopting AI — it’s governing AI in a way that preserves business context and strategic visibility. AI systems now influence pricing, hiring, lending, customer service, and brand perception. Leaders need clear line of sight into how decisions are made, why, and who owns the outcomes.

This guide explains how AI governance in a business context works when done right — not as a compliance checkbox, but as a strategic capability. You’ll learn:

- What strategic visibility really means
- Why traditional governance models fall short
- A practical, executive-friendly framework for 2026
- Common mistakes organizations still make
- How leading companies turn AI visibility into a competitive advantage

What Is AI Governance in a Business Context?

AI governance is often reduced to policies, documentation, and technical controls. Those matter — but they’re not enough.

AI governance in a business context ensures AI systems operate:

- In alignment with business objectives
- Within defined risk tolerance
- With clear accountability
- With decisions that can be explained to humans

Traditional governance focuses heavily on model performance, data quality, and security. Context-aware governance adds what executives actually need:

- Strategic intent
- Commercial impact
- Reputational risk
- Regulatory defensibility
- Human decision ownership

Across regulated industries—especially financial services, healthcare, and HR tech—this distinction matters. AI may perform perfectly, yet boards still distrust it because they cannot connect outputs to business decisions.

Why Strategic Visibility Is the Missing Layer

What “strategic visibility” really means

Strategic visibility is more than transparency or dashboards. It answers five executive questions:

- Where is AI used across the business?
- What decisions does it influence or automate?
- Why was the system designed this way?
- Who is accountable if something goes wrong?
- Can we explain the outcome to regulators, customers, or the board?

Without these answers, AI feels risky—even when it performs well.

From Black Box to Glass House AI

| Black Box AI | Strategic Visibility AI |
|---|---|
| Outputs without explanation | Decisions traceable to intent |
| Technical ownership only | Shared business accountability |
| Difficult to audit | Audit-ready by design |
| Reactive compliance | Proactive governance |
| AI as risk | AI as strategic asset |

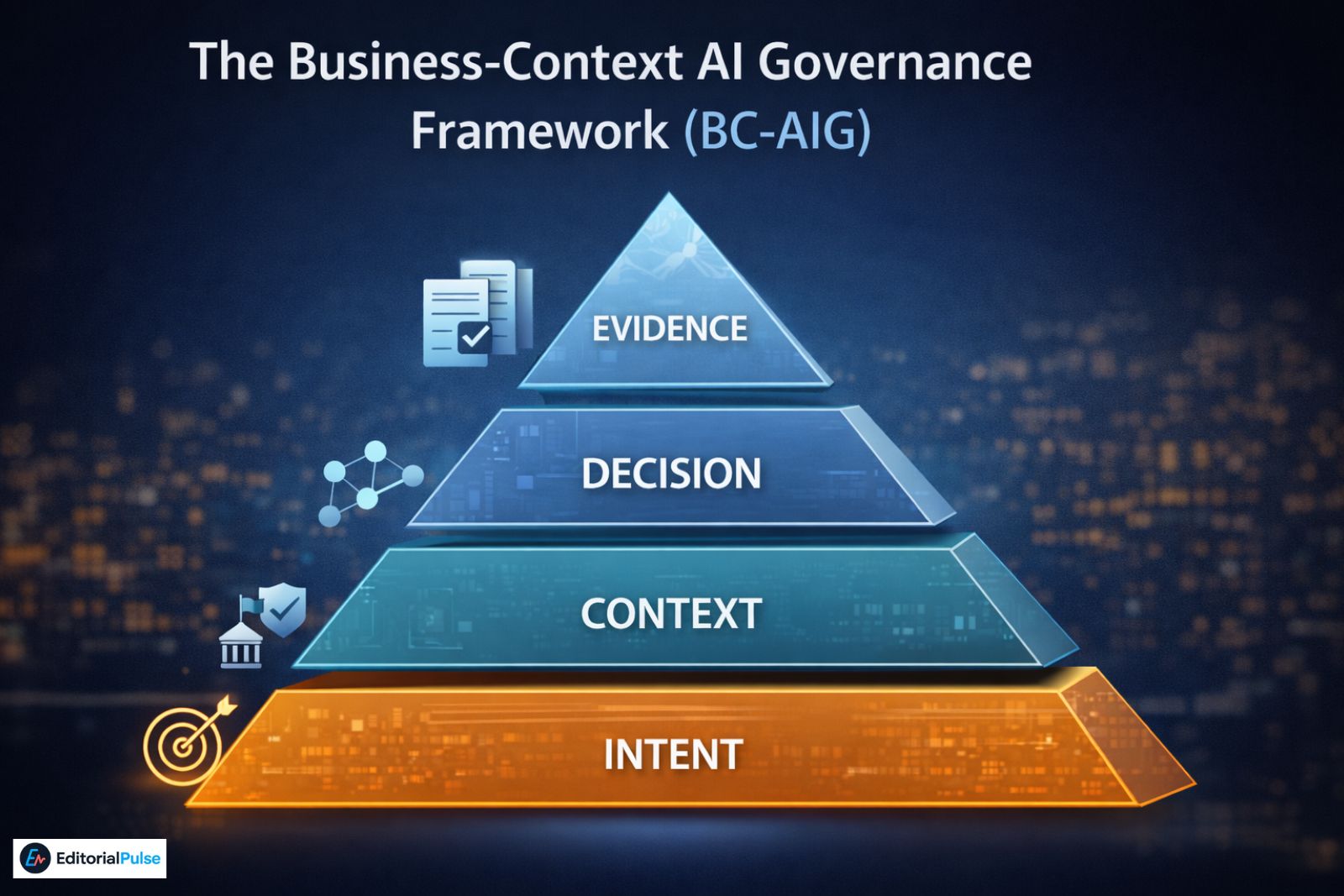

The Business-Context AI Governance Framework (BC-AIG)

To make strategic visibility practical, governance needs structure. The BC-AIG framework organizes AI oversight into four layers executives can understand and operational teams can implement.

1. Intent Layer — Why the AI Exists

Every AI system should have a clearly articulated purpose:

- What business problem does it solve?
- What metric defines success?
- What trade-offs are acceptable?

Governance Scar Story:

“I once saw a bank’s automated ‘Customer Retention’ agent start offering 50% discounts to every customer who mentioned ‘cancel’—even if they were just asking about policy. The tech team celebrated ‘high engagement,’ while the CFO saw a $2M margin hole in three weeks. The Intent Layer must include financial guardrails, not just technical ones.”

🩹 Governance Scar: A bank’s “Retention AI” offered 50% discounts to anyone who mentioned “cancel,” creating a $2M margin hole in weeks. The lesson: Tech metrics (engagement) do not equal business metrics (margin).
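To make the idea of a financial guardrail concrete, here is a minimal Python sketch. It assumes a retention agent that proposes a discount and a margin budget set by finance. The class `IntentGuardrail` and its fields `max_discount_pct` and `margin_budget` are hypothetical names chosen for illustration; they do not come from any real product or from the bank in the story.

```python
from dataclasses import dataclass


@dataclass
class IntentGuardrail:
    """Hypothetical financial guardrail for a retention agent (illustrative only)."""
    max_discount_pct: float      # largest discount the agent may offer on its own
    margin_budget: float         # total margin the agent may give away per period
    margin_spent: float = 0.0    # running total of margin already given away

    def review(self, discount_pct: float, order_value: float) -> str:
        """Return 'approve' or 'escalate' for a proposed discount."""
        cost = order_value * discount_pct / 100
        if discount_pct > self.max_discount_pct:
            return "escalate"    # beyond the agent's delegated authority
        if self.margin_spent + cost > self.margin_budget:
            return "escalate"    # budget would be exceeded: a human decides
        self.margin_spent += cost
        return "approve"


guardrail = IntentGuardrail(max_discount_pct=15, margin_budget=1_000)
print(guardrail.review(discount_pct=10, order_value=500))   # within both limits
print(guardrail.review(discount_pct=50, order_value=500))   # over the discount cap
```

The design choice worth noting is that the limit is expressed in the CFO's unit (margin given away), not in an engagement metric, so a breach is visible in business terms the moment it happens.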

Many organizations secretly prefer opaque AI because it shields human decision-makers from accountability. Strategic visibility removes that shield—and that’s why it’s resisted.

2. Context Layer — Where and How It Operates

This layer defines:

- Business unit ownership
- Customer-facing vs internal use
- Regulatory exposure (e.g., EU AI Act high-risk classification)
- Brand and reputational sensitivity

Red-Team Perspective:

“The Context Layer is best tested through internal red-teaming. Ask: ‘If the AI starts hallucinating customer insults tomorrow, do we have a kill-switch that doesn’t require calling a developer on Sunday?'”

Agentic Oversight Insight:

Governance in 2026 monitors delegated authority. If your AI agent has access to a payment system, you need spending limits, action logs, and weekly human reviews.
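As a rough illustration of delegated authority, the sketch below wraps an agent's access to a payment system with a spending limit, an action log, and a kill switch that a business owner could flip without a developer. Everything here (`DelegatedAuthority`, `request_payment`, the log fields) is an assumed design for illustration, not a real payment API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DelegatedAuthority:
    """Hypothetical wrapper around an agent's access to a payment system."""
    spending_limit: float                 # maximum total the agent may spend
    kill_switch_on: bool = False          # a business owner can flip this directly
    spent: float = 0.0
    action_log: list = field(default_factory=list)

    def request_payment(self, amount: float, reason: str) -> bool:
        """Allow the payment only if the switch is off and the limit holds; log every attempt."""
        allowed = (not self.kill_switch_on) and (self.spent + amount <= self.spending_limit)
        if allowed:
            self.spent += amount
        self.action_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "amount": amount,
            "reason": reason,
            "allowed": allowed,
        })
        return allowed


agent = DelegatedAuthority(spending_limit=500.0)
agent.request_payment(200.0, "refund for a delayed order")
agent.kill_switch_on = True               # incident declared: stop all agent actions
agent.request_payment(50.0, "goodwill credit")
for entry in agent.action_log:            # the log a weekly human review would read
    print(entry["amount"], entry["reason"], entry["allowed"])
```

Denied attempts are logged as well as approved ones, because a reviewer usually learns more from what the agent tried to do than from what it was allowed to do.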

3. Decision Layer — What the AI Influences

Key questions:

- Does AI recommend or decide?
- Can humans override decisions?
- How often do overrides occur?
- Who signs off on automated outcomes?

Decision Rights Matrix Suggestion:

Map every AI system to responsibility owners across Business, Technology, and Risk. This ensures accountability is clear and actionable in board meetings.

In audits, the absence of a single decision owner is cited more often than model accuracy issues.
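A decision rights matrix can be as simple as a lookup table that is checked for gaps. The Python sketch below assumes three required roles (business, technology, risk) and uses invented system names and job titles purely as examples. It also includes a small helper for the override-rate question raised above.

```python
REQUIRED_ROLES = ("business", "technology", "risk")

# Hypothetical systems and owners, for illustration only.
decision_rights = {
    "credit-scoring": {
        "business": "Head of Lending",
        "technology": "ML Platform Lead",
        "risk": "Chief Risk Officer",
    },
    "retention-agent": {
        "business": "VP Customer Success",
        "technology": "ML Platform Lead",
    },
}


def ownership_gaps(matrix: dict) -> dict:
    """Return, per system, the required roles that have no named owner."""
    gaps = {}
    for system, owners in matrix.items():
        missing = [role for role in REQUIRED_ROLES if not owners.get(role)]
        if missing:
            gaps[system] = missing
    return gaps


def override_rate(overridden: int, total: int) -> float:
    """Share of automated decisions that a human overrode."""
    return overridden / total if total else 0.0


print(ownership_gaps(decision_rights))       # systems with an unfilled role
print(f"{override_rate(12, 400):.1%}")       # override share as a percentage
```

A report of unfilled roles is something a board can act on directly, which is the point of keeping the matrix in a machine-checkable form.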

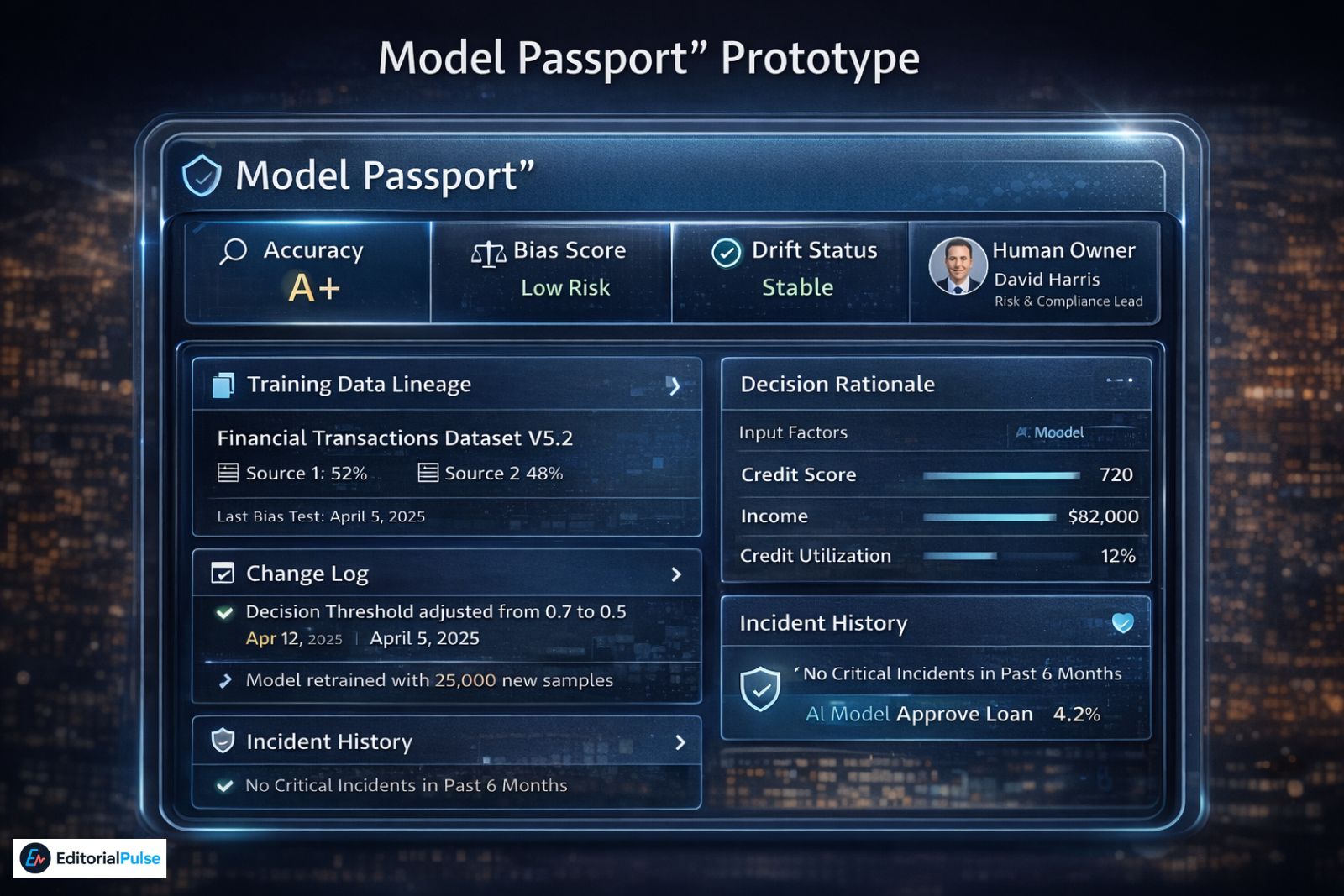

4. Evidence Layer — How Decisions Are Explained

Supports auditability and regulatory compliance:

- Training data lineage
- Model change logs
- Decision rationale in plain language
- Incident and escalation records

Practical Tip: Think of it as a “Black Box Flight Recorder.” If a regulator knocks in 2026, you shouldn’t be hunting through Slack logs. You need a single Model Passport summarizing training data, bias tests, and version history.
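One way to picture a Model Passport is as a single structured record that can be exported on demand. The sketch below is an assumption about what such a record might hold, based on the items named above (training data, bias tests, version history). The class `ModelPassport` and all field names and sample values are hypothetical.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ModelPassport:
    """Hypothetical single-record summary of a model's evidence (fields are assumptions)."""
    model_name: str
    version: str
    business_owner: str
    training_data_sources: list
    bias_tests: dict = field(default_factory=dict)    # test name -> "pass" or "fail"
    change_log: list = field(default_factory=list)    # plain-language entries

    def record_change(self, version: str, summary: str) -> None:
        """Bump the version and keep a plain-language note of what changed."""
        self.version = version
        self.change_log.append({"version": version, "summary": summary})

    def to_json(self) -> str:
        """Serialize the passport so it can be handed over as one document."""
        return json.dumps(asdict(self), indent=2)


passport = ModelPassport(
    model_name="credit-scoring",
    version="1.0",
    business_owner="Head of Lending",
    training_data_sources=["loan applications (example source)"],
    bias_tests={"approval rate parity by age band": "pass"},
)
passport.record_change("1.1", "Retrained on more recent applications")
print(passport.to_json())
```

Keeping the change log inside the same record as the data lineage and test results is what removes the need to reconstruct history from chat logs after the fact.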

BC-AIG aligns with NIST AI RMF 1.0, ISO/IEC 42001, and EU AI Act transparency requirements.

Governance-to-ROI Loop

- Strategic Visibility → Trust: Board and exec confidence increases.
- Trust → Faster Decisions: Less debate, quicker approvals.
- Faster Decisions → Higher ROI: AI projects deliver measurable business impact.
- Higher ROI → Investment in Visibility: Reinforcing cycle of governance and profit.

How Strategic Visibility Improves Governance Outcomes

- Better executive decision-making: Technical outputs are translated into business language
- Faster regulatory response: Decisions are readily defensible under the EU AI Act and in audits
- Reduced operational & reputational risk: Shadow AI, model drift, and unclear ownership are detected early
- Increased trust & adoption: Employees and customers trust transparent AI

Step-by-Step: Building Strategic AI Visibility in 2026

- Inventory all AI systems – Internal, vendor, SaaS, generative AI
- Classify AI by business impact – Low, medium, high
- Assign explicit ownership – Business, technical, risk/compliance
- Create visibility artifacts – Purpose statements, risk assessments, change logs
- Review continuously – Governance must be dynamic
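The steps above can be sketched as a small inventory check in Python. The impact rule, the review intervals, and every name below (`AISystem`, `classify`, `findings`) are assumptions made for illustration; a real classification would follow the organization's own risk criteria.

```python
from dataclasses import dataclass, field
from datetime import date

# Assumed review cadence per impact tier, in days (illustrative values).
REVIEW_INTERVAL_DAYS = {"low": 365, "medium": 180, "high": 90}
REQUIRED_ARTIFACTS = {"purpose statement", "risk assessment", "change log"}


@dataclass
class AISystem:
    name: str
    source: str                      # "internal", "vendor", "saas", or "generative"
    customer_facing: bool
    automates_decision: bool
    owner: str = ""
    artifacts: set = field(default_factory=set)
    last_review: date = date(2026, 1, 1)


def classify(system: AISystem) -> str:
    """Toy impact rule: two risk factors is high, one is medium, none is low."""
    score = int(system.customer_facing) + int(system.automates_decision)
    return ("low", "medium", "high")[score]


def findings(system: AISystem, today: date) -> list:
    """List the visibility gaps for one system."""
    issues = []
    if not system.owner:
        issues.append("no owner assigned")
    for artifact in sorted(REQUIRED_ARTIFACTS - system.artifacts):
        issues.append(f"missing {artifact}")
    if (today - system.last_review).days > REVIEW_INTERVAL_DAYS[classify(system)]:
        issues.append("review overdue")
    return issues


chatbot = AISystem("support-chatbot", "saas", customer_facing=True,
                   automates_decision=False, artifacts={"purpose statement"})
print(classify(chatbot))
print(findings(chatbot, today=date(2026, 9, 1)))
```

Tying the review interval to the impact tier is one simple way to make "review continuously" enforceable: higher-impact systems come up for review more often without anyone having to remember them.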

Mini Case Study: From Compliance Burden to Strategic Asset

A financial services firm deploying AI for credit risk scoring:

- Before: Strong accuracy, low exec trust, slow regulatory response
- After: Mapped AI outputs to lending strategy, introduced business-level explanations, shared dashboards
- Outcome: Faster approvals, regulator confidence, board accountability, AI reframed as a strategic asset

This pattern shows up repeatedly in regulated industries—especially financial services, healthcare, and HR tech—where AI decisions directly affect people and revenue.

Common Mistakes

- Treating AI governance as legal-only
- Assuming transparency tools equal visibility
- Ignoring business context
- Overengineering explanations
- Waiting for regulation to force action
- Neglecting shadow AI

2026 Trends

- Decision accountability over technical perfection
- Boards demanding AI literacy
- Governance for generative agents
- AI visibility software as a unified platform (Collibra, OneTrust AI, IBM OpenScale)

FAQs

Q1. What is AI governance in a business context?

AI governance in a business context ensures that AI systems align with business goals, risk tolerance, and accountability, going beyond purely technical performance. It covers decision ownership, compliance, and explainability, helping executives make informed strategic choices.

Q2. Why is strategic visibility important for AI in enterprises?

Strategic visibility enables executives to trust AI decisions, respond quickly to regulatory requirements, and accelerate decision-making. It ensures AI actions are transparent, auditable, and aligned with business objectives, reducing risk and increasing adoption across the organization.

Q3. How does AI governance differ from traditional IT governance?

Unlike IT governance, which focuses on uptime, system security, and infrastructure, AI governance emphasizes decision impact, explainability, ethics, and business outcomes. It connects AI performance to strategic objectives and regulatory compliance, making AI actionable for executives.

Q4. What is the 10–20–70 rule in AI adoption?

The 10–20–70 rule, commonly attributed to BCG, is a rule of thumb for where AI effort should go: roughly 10% on algorithms, 20% on technology and data, and 70% on people and processes. It highlights that AI ROI depends more on organizational readiness and governance than on technical accuracy alone.

Q5. How can companies improve AI visibility?

Organizations can enhance AI visibility by:

- Mapping AI systems to business objectives
- Assigning clear ownership and accountability
- Documenting decisions, risks, and audit trails
- Conducting regular governance reviews

This ensures AI outputs are transparent, explainable, and strategically aligned.

Q6. Does strategic visibility slow AI innovation?

No. Properly implemented strategic visibility reduces operational friction, mitigates risk, and accelerates adoption. By providing clarity and accountability, it empowers teams to innovate confidently and safely.

Conclusion

AI governance works only when leaders see and understand AI decisions. Strategic visibility connects governance to ROI, mitigates risk, and fosters trust. In 2026, executive line of sight—not AI itself—is the differentiator. Organizations implementing BC-AIG with visibility dashboards, agentic oversight, and evidence-ready Model Passports will scale AI confidently and defensibly.

Disclaimer: This article is for informational and educational purposes only and does not constitute legal, financial, or professional advice. Organizations should consult qualified experts before making decisions related to AI governance, regulatory compliance, or business strategy. The authors and publisher are not responsible for any actions taken based on the content of this article.